39 soft labels machine learning

Labeling images and text documents - Azure Machine Learning Sign in to Azure Machine Learning studio. Select the subscription and the workspace that contains the labeling project. Get this information from your project administrator. Depending on your access level, you may see multiple sections on the left. If so, select Data labeling on the left-hand side to find the project.

A radical new technique lets AI learn with practically no data "Soft labels try to capture these shared features. So instead of telling the machine, 'This image is the digit 3,' we say, 'This image is 60% the digit 3, 30% the digit 8, and 10% the ...

PDF Efficient Learning with Soft Label Information and Multiple Annotators Note that our learning from auxiliary soft labels approach is complementary to active learning: while the latter aims to select the most informative examples, we aim to gain more useful information from those selected. This gives us an opportunity to combine these two approaches.

Soft labels machine learning

Learning Soft Labels via Meta Learning - Apple Machine Learning Research One-hot labels do not represent soft decision boundaries among concepts, and hence, models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

Is it okay to use cross entropy loss function with soft labels? The sum is taken over the set of possible class labels. In the case of 'soft' labels like you mention, the labels are no longer class identities themselves, but probabilities over two possible classes. Because of this, you can't use the standard expression for the log loss. But, the concept of cross entropy still applies.

What is the definition of "soft label" and "hard label"? A soft label is one which has a score (probability or likelihood) attached to it. So the element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.
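To make the cross-entropy answer above concrete, here is a minimal sketch in plain Python. It assumes the general form of cross entropy, a sum over classes of the target probability times the negative log of the predicted probability. The function name `soft_cross_entropy`, the `eps` guard, and the example prediction are illustrative assumptions, not taken from any of the quoted sources.

```python
import math

def soft_cross_entropy(target, predicted, eps=1e-12):
    """Cross entropy -sum_k target[k] * log(predicted[k]) over all classes.

    `target` may be a one-hot vector (hard label) or any probability
    distribution (soft label). `eps` is an assumed guard against log(0).
    """
    if len(target) != len(predicted):
        raise ValueError("target and predicted must have the same length")
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))

hard_target = [0.0, 1.0]  # hard label: the element belongs to class 1
soft_target = [0.3, 0.7]  # soft label: class 1 with probability 0.7
prediction = [0.4, 0.6]   # hypothetical model output

hard_loss = soft_cross_entropy(hard_target, prediction)  # equals the usual log loss
soft_loss = soft_cross_entropy(soft_target, prediction)
```

With a one-hot target only one term of the sum survives, which is the standard log loss; with a soft target every class contributes in proportion to its target probability.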

How to Label Data for Machine Learning: Process and Tools - AltexSoft Data labeling (or data annotation) is the process of adding target attributes to training data and labeling them so that a machine learning model can learn what predictions it is expected to make. This process is one of the stages in preparing data for supervised machine learning.

[2009.09496] Learning Soft Labels via Meta Learning - arXiv.org Learning Soft Labels via Meta Learning Nidhi Vyas, Shreyas Saxena, Thomas Voice One-hot labels do not represent soft decision boundaries among concepts, and hence, models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

Labelling Images - 15 Best Annotation Tools in 2022 For this purpose, the best machine learning as a service and image processing service is offered by Folio3 and is highly recommended by many. ... Its algorithm-based automation features include a pre-labeling feature that pre-labels image data using an existing machine learning (ML) model. Label Studio also has a vibrant user base and an active ...

Data Labeling Software: Best Tools for Data Labeling - Neptune In machine learning and AI development, the aspects of data labeling are essential. You need a structured set of training data that an ML system can learn from. It takes a lot of effort to create accurately labeled datasets. Data labeling tools come very much in handy because they can automate the labeling process, which […]

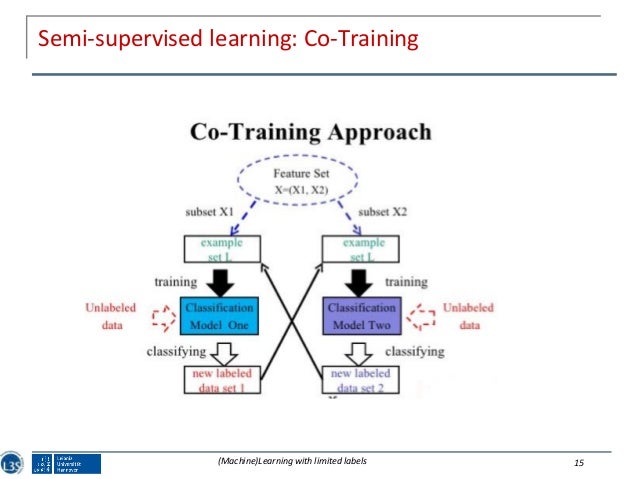

Semi-Supervised Learning With Label Propagation Nodes in the graph then have soft labels, or label distributions, based on the labels or label distributions of examples connected nearby in the graph. Many semi-supervised learning algorithms rely on the geometry of the data induced by both labeled and unlabeled examples to improve on supervised methods that use only the labeled data.

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignments where class labels are binary (i.e., positive for one class and a negative example for all other classes), soft label assignment allows the positive class to have the largest probability, while all other classes have a very small probability.

What is data labeling? - Amazon Web Services (AWS) In machine learning, data labeling is the process of identifying raw data (images, text files, videos, etc.) and adding one or more meaningful and informative labels to provide context so that a machine learning model can learn from it. For example, labels might indicate whether a photo contains a bird or car, which words were uttered in an ...

Pseudo Labelling - A Guide To Semi-Supervised Learning There are 3 kinds of machine learning approaches: Supervised, Unsupervised, and Reinforcement Learning techniques. Supervised learning, as we know, is where data and labels are present. Unsupervised learning is where only data and no labels are present. Reinforcement learning is where the agents learn from the actions taken to generate rewards.
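The label propagation entry above describes graph nodes picking up soft labels from their neighbours. The following is a simplified sketch of that idea in plain Python, assuming an unweighted graph, clamped seed labels, and a fixed number of averaging passes. The function `propagate_labels` and the toy chain graph are hypothetical and are not the algorithm from the quoted tutorial.

```python
def propagate_labels(adjacency, seed_labels, n_classes, n_iter=50):
    """Return a label distribution (soft label) for every node.

    adjacency:   dict mapping node -> list of neighbouring nodes
    seed_labels: dict mapping labelled node -> class index
    """
    dist = {}
    for node in adjacency:
        if node in seed_labels:
            # Labelled nodes start, and stay, one-hot.
            dist[node] = [1.0 if k == seed_labels[node] else 0.0
                          for k in range(n_classes)]
        else:
            # Unlabelled nodes start from a uniform distribution.
            dist[node] = [1.0 / n_classes] * n_classes

    for _ in range(n_iter):
        new_dist = {}
        for node, neighbours in adjacency.items():
            if node in seed_labels or not neighbours:
                new_dist[node] = dist[node]  # clamp seeds, skip isolated nodes
            else:
                # Average the neighbours' current distributions.
                new_dist[node] = [
                    sum(dist[nb][k] for nb in neighbours) / len(neighbours)
                    for k in range(n_classes)
                ]
        dist = new_dist
    return dist

# Toy chain a - b - c - d: "a" is labelled class 0 and "d" is labelled class 1.
graph = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
soft = propagate_labels(graph, {"a": 0, "d": 1}, n_classes=2)
```

In this toy chain the unlabelled node next to `a` ends up leaning towards class 0 and the one next to `d` towards class 1, which is the "label distribution based on nearby examples" behaviour the entry refers to.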

Features and labels - Module 4: Building and evaluating ML ... - Coursera This module explores the various considerations and requirements for building a complete dataset in preparation for training, evaluating, and deploying an ML model. It also includes two demos—Vision API and AutoML Vision—as relevant tools that you can easily access yourself or in partnership with a data scientist.

ARIMA for Classification with Soft Labels - Medium In this post, we introduced a technique to carry out classification tasks with soft labels and regression models. Firstly, we applied it with tabular data, and then we used it to model time-series with ARIMA. Generally, it is applicable in every context and every scenario, providing also probability scores.

Creating targets for machine learning labels - Python Programming for ... This function will take any ticker, create the needed dataset, and create our "target" column, which is our label. The target column will have either a -1, 0, or 1 for each row, based on our function and the columns we feed through. Now, we can get the distribution:

Understanding Deep Learning on Controlled Noisy Labels In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

Label Smoothing: An ingredient of higher model accuracy These are soft labels rather than hard labels of exactly 0 and 1. As a result, a confidently incorrect prediction incurs a somewhat lower loss, so the model is penalized, and therefore updated, to a slightly lesser degree when it is wrong.
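As a rough illustration of the label smoothing entries above, the sketch below uses one common formulation: scale the one-hot vector by `1 - factor` and spread `factor` evenly over all classes. The function names, the factor of 0.1, and the example prediction are assumptions chosen for illustration, not values from the quoted articles.

```python
import math

def smooth_labels(one_hot, factor=0.1):
    """Turn a hard one-hot label into a soft label.

    Each entry becomes y * (1 - factor) + factor / K, where K is the
    number of classes, so the result still sums to 1.
    """
    k = len(one_hot)
    return [y * (1.0 - factor) + factor / k for y in one_hot]

def cross_entropy(target, predicted):
    """Cross entropy between a target distribution and a prediction."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted))

hard = [0.0, 0.0, 1.0, 0.0]
smoothed = smooth_labels(hard, factor=0.1)  # roughly [0.025, 0.025, 0.925, 0.025]

# Hypothetical prediction that is confidently wrong (favours class 0).
wrong_prediction = [0.97, 0.01, 0.01, 0.01]
hard_loss = cross_entropy(hard, wrong_prediction)
smoothed_loss = cross_entropy(smoothed, wrong_prediction)
```

For this confidently wrong prediction the smoothed target yields a slightly lower loss than the hard target, which matches the behaviour described in the entry.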

Machine Learning Model and Its 8 Different Types | Simplilearn This step involves choosing a model technique, model training, selecting algorithms, and model optimization. Consult the machine learning model types mentioned above for your options. Evaluate the model's performance and set up benchmarks. This step is analogous to the quality assurance aspect of application development.

Efficient Learning of Classification Models from Soft-label Information ... soft-label further refining its class label. One caveat of applying this idea is that soft-labels based on human assessment are often noisy. To address this problem, we develop and test a new classification model learning algorithm that relies on soft-label binning to limit the effect of soft-label noise.

Softmax Function Definition | DeepAI Mathematical definition of the softmax function, where all the z_i values are the elements of the input vector and can take any real value. The term on the bottom of the formula is the normalization term which ensures that all the output values of the function will sum to 1, thus constituting a valid probability distribution.
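The description above matches the standard softmax definition, in which each output is exp(z_i) divided by the sum of exp(z_j) over all elements of the input vector. A minimal plain-Python sketch follows; subtracting the maximum before exponentiating is an added numerical-stability step that does not change the result, and the example input is arbitrary.

```python
import math

def softmax(z):
    """Map a list of real values to a probability distribution.

    Computes exp(z_i) / sum_j exp(z_j). Subtracting max(z) first avoids
    overflow for large inputs and leaves the output unchanged.
    """
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)  # the normalization term (the bottom of the formula)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
```

The outputs are all positive, preserve the ordering of the inputs, and sum to 1, so they can be read as a distribution over classes.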

Knowledge distillation in deep learning and its applications Soft labels refer to the output of the teacher model. In the case of classification tasks, the soft labels represent the probability distribution among the classes for an input sample. The second category, on the other hand, considers works that distill knowledge from other parts of the teacher model, optionally including the soft labels.
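A small sketch of how a teacher's soft labels might be used as a training target for a student. It assumes the widely used temperature-scaled softmax, which the excerpt above does not mention, and a plain cross-entropy loss between teacher and student distributions. All names and logit values here are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over logits / temperature; higher temperature gives flatter output."""
    scaled = [v / temperature for v in logits]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross entropy between the teacher's soft labels and the student's output."""
    teacher_soft = softmax_with_temperature(teacher_logits, temperature)
    student_soft = softmax_with_temperature(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher_soft, student_soft))

teacher_logits = [4.0, 1.0, 0.5]  # hypothetical teacher output for one sample
soft_labels = softmax_with_temperature(teacher_logits, temperature=2.0)

loss_close = distillation_loss([3.5, 1.2, 0.4], teacher_logits)  # student agrees
loss_far = distillation_loss([0.2, 3.0, 1.0], teacher_logits)    # student disagrees
```

A student whose logits resemble the teacher's gets a lower loss than one that favours a different class, so minimizing this loss pulls the student towards the teacher's class distribution.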

Learning classification models with soft-label information Materials and methods: Two types of methods that can learn improved binary classification models from soft labels are proposed. The first relies on probabilistic/numeric labels, the other on ordinal categorical labels. We study and demonstrate the benefits of these methods for learning an alerting model for heparin induced thrombocytopenia.

Regression - Features and Labels - Python Programming Tutorials How does the actual machine learning thing work? With supervised learning, you have features and labels. The features are the descriptive attributes, and the label is what you're attempting to predict or forecast. Another common example with regression might be to try to predict the dollar value of an insurance policy premium for someone.

What is the difference between soft and hard labels? Hard label = binary encoded, e.g. [0, 0, 1, 0]. Soft label = probability encoded, e.g. [0.1, 0.3, 0.5, 0.1]. Soft labels have the potential to tell a model more about the meaning of each sample.

The Ultimate Guide to Data Labeling for Machine Learning In machine learning, if you have labeled data, that means your data is marked up, or annotated, to show the target, which is the answer you want your machine learning model to predict. In general, data labeling can refer to tasks that include data tagging, annotation, classification, moderation, transcription, or processing.

scikit-learn classification on soft labels - Stack Overflow Generally speaking, the form of the labels ("hard" or "soft") is given by the algorithm chosen for prediction and by the data on hand for target. If your data has "hard" labels, and you desire a "soft" label output by your model (which can be thresholded to give a "hard" label), then yes, logistic regression is in this category.
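The answer above notes that a "soft" model output can be thresholded to give a "hard" label. Here is a minimal plain-Python sketch of that step. The helper names, the 0.5 threshold, and the example probabilities are assumptions for illustration; this is not scikit-learn's API.

```python
def harden(probability, threshold=0.5):
    """Binary case: map a predicted probability to a hard 0/1 label."""
    return 1 if probability >= threshold else 0

def harden_multiclass(distribution):
    """Multiclass case: pick the index of the most probable class."""
    return max(range(len(distribution)), key=lambda k: distribution[k])

# Hypothetical predicted probabilities of the positive class.
soft_outputs = [0.92, 0.35, 0.5, 0.08]
hard_outputs = [harden(p) for p in soft_outputs]

# Hypothetical class distribution for one sample.
predicted_class = harden_multiclass([0.1, 0.3, 0.5, 0.1])
```

Hardening discards the confidence information, so it is usually applied only at the point where a single decision is required.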

